Kinect for artists

Animata – OSC – MoCap – Stuff

On February 1, 2012, I gave a talk at Tiny Army about using the XBox 360 Kinect to drive 2D and 3D animation software.

This is a write-up of my notes and slides.

I've omitted all sorts of details because they didn't really figure into the actual talk, and describing how to really make use of each piece of software from my talk could be a talk of its own.

I'm thinking of writing all this up in more detail (plus covering more tools and stuff) and publishing this as an E-book. If you would be interested in such a book please let me know and I'll keep you informed. (Drop me a line at james@neurogami.com).

Also, if you think there's an error in this please let me know. Thanks!

Code for the talk is being uploaded to the Neurogami GitHub account.

What is the Kinect?

The Kinect is an XBox 360 hardware add-on made by Microsoft.

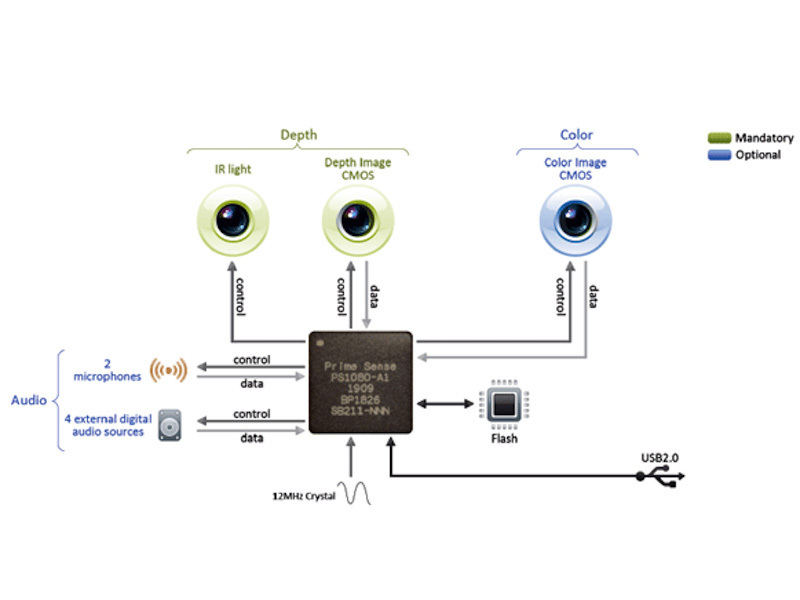

It uses multiple cameras and infrared sensors for depth detection and skeleton tracking.

The initial version was released as an XBox 360 add-on.

Version one costs $150, or $100 if you catch a sale.

There's now a second version (Kinect for Windows) that costs $250.00. It will get you some better official support from Microsoft, but if you're using the Kinect with non-Microsoft software there appears to be little benefit for the cost.

This talk used an XBox Kinect. I don't know if the current open-source Kinect software works with the newer Kinect.

Using free, open-source software you can make use of the Kinect from a standard computer running Windows, Linux, or OSX; you do not need to buy an XBox.

Hardware

The Kinect itself is a black bar sitting on a motorized stand. You can tilt it up or down. There are assorted sensors for RGB color data, and infrared devices for detecting depth data.

See also: www.linuxjournal.com/content/kinect-linux

Kinect hardware. From: www.ifixit.com/Teardown/Microsoft-Kinect-Teardown/4066/1

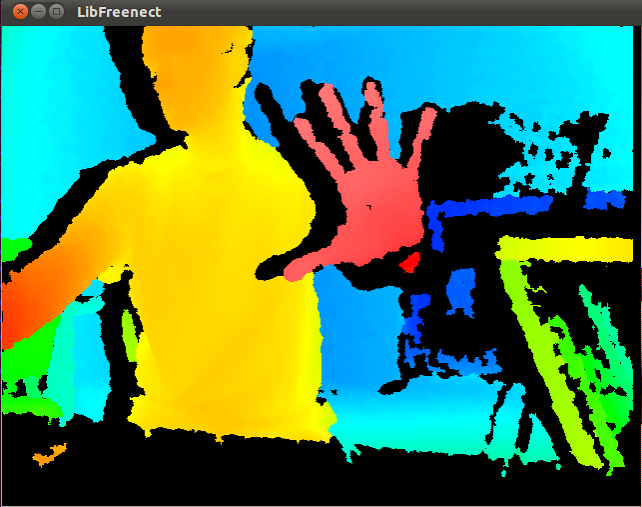

Depth data. From: http://old.vislab.usyd.edu.au/blogs/index.php/2010/11/23/kinect-depth-map?blog=61

Depth Data

- Range: 50 cm to 5 m

- Resolution: about 1.5 mm at 50 cm; about 5 cm at 5 m.

Factoids glommed from: http://stackoverflow.com/questions/7696436/precision-of-the-kinect-depth-camera

Depth data is often rendered in sample programs using different colors, like topological maps.

In this picture, the closer an object is, the redder it is.
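As a rough illustration of that kind of rendering, here is a minimal Python sketch that maps a depth reading to a color, red when near and blue when far. The function name `depth_to_rgb` and the red-to-blue ramp are assumptions for illustration; the 50 cm to 5 m limits come from the range quoted above, and real sample programs may use quite different palettes.

```python
def depth_to_rgb(depth_mm, near_mm=500, far_mm=5000):
    """Map a depth reading in millimetres to an (r, g, b) tuple.

    Hypothetical helper: near objects come out red, far objects blue.
    """
    # Clamp so out-of-range readings take the end colors.
    clamped = max(near_mm, min(far_mm, depth_mm))
    # t is 0.0 at the near limit and 1.0 at the far limit.
    t = (clamped - near_mm) / (far_mm - near_mm)
    red = round(255 * (1.0 - t))
    blue = round(255 * t)
    return (red, 0, blue)
```

Calling this on every pixel of a depth frame gives you an image you can draw directly.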


Skeleton tracking. From: http://vimeo.com/26374492

The Kinect can detect up to two skeletons (but up to six bodies), though what you can actually do will depend on the software you're using.

The tracking locates certain joints and follows those. It works best when there's a full view, but is often pretty robust when there's some occlusion.

Animata

I gave a demo of controlling Animata using the Kinect via OSCeleton.

Animata is developed by some people at Kitchen Budapest (AKA “The spicy innovation lab”) who do assorted very cool artist-hacker projects.

Animata is free, and open-source, and theoretically runs on Windows, OSX, and Linux.

I've had best results on Windows though I've not really tried using it that much on my Mac.

With Animata, you take png images and create a mesh of points and vertices covering the areas of interest.

You can then create a skeletal structure of joints and bones, and attach sections of the picture mesh to particular bones.

Then, when you move the skeletal structure, the mapped image moves with it.

You can see this in the videos on the Animata page (or at the Kitchen Budapest YouTube page or Vimeo page).

Images sit in (or on) layers. You can have multiple layers in a project, and the layer order defines image overlap. Layers can have multiple images.

You can control the opacity of layers, and also toggle visibility.

I've had problems under indeterminate conditions where multiple layers cause Animata to crash. I think this is related to some graphics card setting for OpenGL rendering.

Mechanical animation

Images mapped to skeletal structures can be made to move automatically. When defining the structure you can set points as being fixed to a specific location, and you can also set bones to automatically expand and contract.

You end up with a sort of piston machine, with bones growing and shrinking, moving about their joints.

Animata Quirks

Animata has a number of quirks:

- Tedious

You have to place the points and vertices and make sure you correctly cover the image. Getting the triangle pattern right can be tricky. The lack of undo means screwups are hard to correct. The hardest part is placing points, mesh lines, joints, and bones so that when you map mesh to bones you cover everything once and cover the entire image.

If you omit a mesh point and then move the bones, that mesh point stays fixed while the others move, and getting things back to the right place is like sculpting mercury. Your best bet is to save a copy at known OK versions (either use version control or just copy off versions with different names) and reload a previous good version when you break something.

- No “undo” command :(

- Crashy (sometimes)

- Also …

When you try to use OSC commands via OSCeleton you need to do some scaling depending on the size of the skeleton, and the size of the screen, and it's hard to get right.
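To make the scaling problem concrete, here is a small Python sketch of the arithmetic involved. It assumes the joint coordinates arrive normalized to the range 0 to 1, which is an assumption rather than something stated above; the function name and the `scale` and offset parameters are hypothetical knobs of the kind you end up tweaking by hand.

```python
def joint_to_screen(x, y, screen_w, screen_h,
                    scale=1.0, offset_x=0.0, offset_y=0.0):
    """Convert a normalized joint position (0..1) to pixel coordinates.

    Hypothetical helper: `scale` grows or shrinks the figure around the
    centre of the screen, and the offsets slide it around afterwards.
    """
    # Scale around the centre (0.5, 0.5) so the figure resizes in place.
    sx = (0.5 + (x - 0.5) * scale) * screen_w + offset_x
    sy = (0.5 + (y - 0.5) * scale) * screen_h + offset_y
    return (sx, sy)
```

With a 640x480 scene, a joint at the centre of the camera view lands at (320, 240) whatever the scale, which makes it easier to tune the scale and the offsets separately.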

One useful thing: You can import Animata layers into other Animata scenes, and you can swap the images used. So if, for example, you create a single-layer character you can later import that into another project, and alter the images used (assuming they map correctly to the bones and such). This is a serious time-saver.

OSCeleton

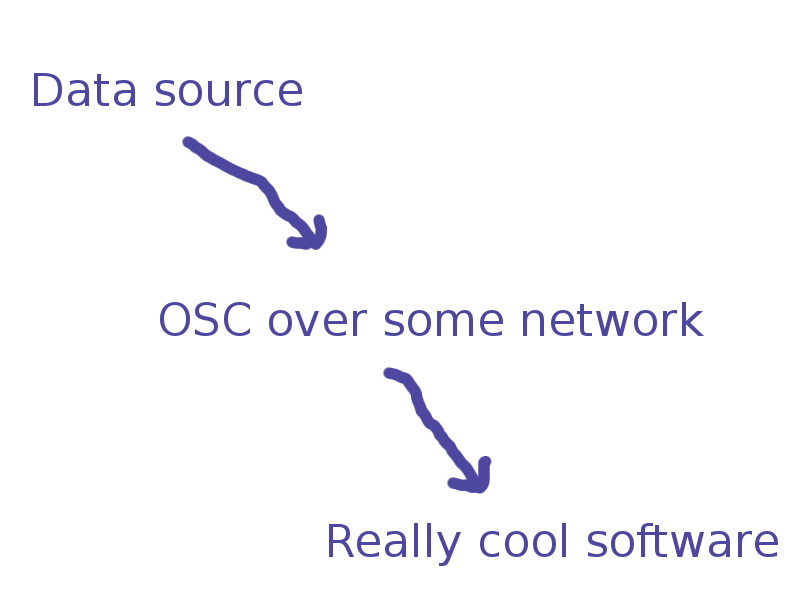

So how is the Kinect talking to Animata?

There's a program called OSCeleton that waits for the Kinect to locate a user, gets the skeleton data, and sends it over a network using a format called OSC. Animata is designed to listen for these OSC commands. What this means is that you can control Animata from any other source that can send OSC commands, which is how I was able to manipulate the layers.

OSC is short for Open Sound Control, which is sort of like MIDI on steroids.

It works over a standard network so, unlike MIDI, you don't need special cables.

The key magic of OSC is that it's a general format, and you can define your own messages. OSCeleton is designed to send commands for certain joint controls.
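To show how little there is to an OSC message, here is a minimal Python sketch that builds one by hand and sends it over UDP, using only the standard library. The byte layout follows the OSC 1.0 format (null-terminated strings padded to four bytes, a type-tag string starting with a comma, big-endian numbers). The function names are made up for this example, and in practice you would more likely reach for an existing OSC library.

```python
import socket
import struct


def osc_string(text):
    """Encode a string the OSC way: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (4 - len(data) % 4) % 4
    return data + b"\x00" * padding


def osc_message(address, *args):
    """Build one OSC message from an address plus int, float, or str arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)   # 32-bit big-endian float
        elif isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)   # 32-bit big-endian integer
        elif isinstance(arg, str):
            type_tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError("unsupported OSC argument: %r" % (arg,))
    return osc_string(address) + osc_string(type_tags) + payload


def send_osc(host, port, address, *args):
    """Send a single OSC message as one UDP datagram."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.sendto(osc_message(address, *args), (host, port))
    finally:
        sock.close()
```

Something like `send_osc("127.0.0.1", 9000, "/right")` would then fire a message at a listener on the same machine; the port here is a placeholder, so use whatever your listener is actually bound to.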

The magic of OSC

What makes OSC so handy is that it acts as a sort of universal language.

OSCeleton only handles the body joints. But since Animata can handle other OSC commands, and doesn't care where they come from, we can fill in the gaps using other tools, such as a Wii controller (albeit with some special software) or TouchOSC, an app for iPhones and Androids.

Also worth noting is that OSCeleton isn't tied to Animata; the Kinect skeleton data sent from OSCeleton can be used by other applications as well.

The Animata OSC handlers

- /anibone

- /joint

- /layervis

- /layeralpha

- /layerpos

- /layerdeltapos
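The general idea behind handlers like these is that a listener decodes each incoming message and routes it by address. The Python sketch below shows that pattern; it is not Animata's actual code, and the function names are hypothetical. Any argument layout you feed it (for instance a joint name followed by x and y for `/joint`) is an assumption to check against the Animata documentation.

```python
import struct


def read_osc_string(data, pos):
    """Read a null-terminated, 4-byte-padded OSC string; return (text, next_pos)."""
    end = data.index(b"\x00", pos)
    text = data[pos:end].decode("ascii")
    # Skip the terminator and any padding up to the next 4-byte boundary.
    next_pos = (end + 4) & ~3
    return text, next_pos


def parse_osc(data):
    """Decode one OSC message into (address, [arguments])."""
    address, pos = read_osc_string(data, 0)
    tags, pos = read_osc_string(data, pos)
    args = []
    for tag in tags[1:]:  # the first character is the leading comma
        if tag == "f":
            args.append(struct.unpack_from(">f", data, pos)[0])
            pos += 4
        elif tag == "i":
            args.append(struct.unpack_from(">i", data, pos)[0])
            pos += 4
        elif tag == "s":
            text, pos = read_osc_string(data, pos)
            args.append(text)
        else:
            raise ValueError("unsupported OSC type tag: %r" % tag)
    return address, args


def make_dispatcher(handlers):
    """Return a function that routes raw OSC packets to handlers by address."""
    def dispatch(data):
        address, args = parse_osc(data)
        handler = handlers.get(address)
        if handler is None:
            return False  # nobody is listening for this address
        handler(*args)
        return True
    return dispatch
```

You would build the dispatcher from a dictionary such as `{"/joint": move_joint, "/layervis": set_layer_visibility}` (both handler names invented here) and hand it each packet read from a UDP socket.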

Getting set up

When the Kinect was released it was intended by Microsoft to be used only when attached to an XBox. But other people were excited by the idea of using it for other purposes, attached to a common computer.

A company named Adafruit Industries offered a bounty to the first person or team who could come up with some software that made a Kinect usable outside of the XBox. The reward was claimed within days of the Kinect release.

Some time later Microsoft saw which way the wind was blowing and released their own official Kinect development kit.

You can read the history here

The open-source code runs, in theory, on Windows, OSX, and Linux. In practice it can be tricky to get everything working just right. It's somewhat complicated by there being two open source projects for basic Kinect interaction; I've found that on Windows they do not play well together and you have to make a choice of one or the other.

Mostly this is not a big deal, but some programs designed to work with the Kinect require specific libraries.

I've set up Kinect software multiple times on different Windows and Linux laptops, with varying difficulty.

When I had a problem and went looking for help the answer always seemed to start with “First, remove all currently installed Kinect software.”

The simplest way to get a machine running is to use a Windows box and the Brekel All-in-one OpenNI Kinect Auto Driver Installer, which is a single program that handles downloading and installing the needed libraries.

Motion Capture

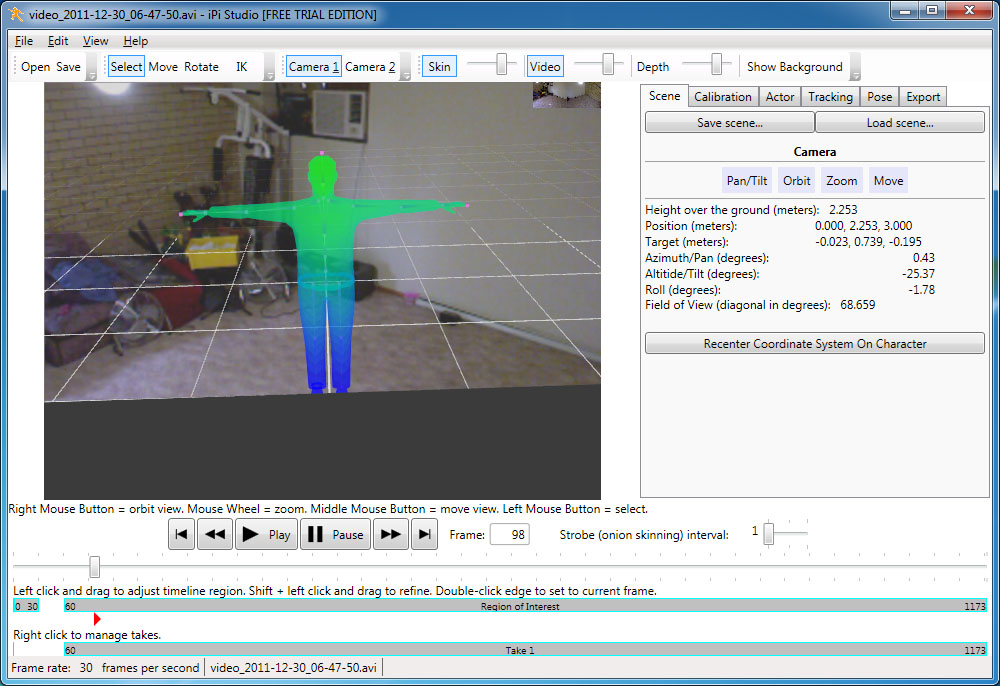

iPi Studio

My initial interest in Kinect and animation was with Animata. But while I was putting together this presentation Daniel Davis forwarded to me some E-mail about some motion capture software.

The software used was iPi Desktop Motion Capture.

It's quite slick, kinda maybe pricey, but it can work with one or two Kinects.

There are three versions: Express ($395), Basic ($594), and Standard ($995). Differences seem to be mainly about how many cameras you can attach and the viewable area you can capture.

There's also a free 30-day trial of the Basic edition.

I have just the one Kinect and gave the trial version a whirl. It's pretty easy to use: there are no markers, and you basically just do your thing.

With the one Kinect there are going to be blind spots; two Kinects will presumably capture a full body. The previous Little Green Dog link shows the results of using two Kinects.

Even with the one the results were interesting. The process works like this: You capture video using a free, separate program called iPi Recorder. It works with Kinects as well as Sony PlayStation3 Eye cameras and DirectShow-compatible webcams (USB and FireWire).

Clear a large enough space (20-foot square?); I used my living room. Set up the Kinect (attached to the machine running iPi Recorder, of course) at one end.

Stand far enough back so that your entire figure is in frame.

Start the recorder and record a few seconds of background.

Then, walk into frame, and adopt a “T” pose for a few seconds.

Do your ninja dance moves.

Stop the recorder when done and save the resulting video.

You then load that video into iPi Studio.

iPi Studio. From: http://www.ipisoft.com/forum/viewtopic.php?f=6&t=5724

You map a T-posed avatar model to that in your video, adjusting placement and scaling until the body parts match. Since you started the video with no figure the software is able to subtract that part and focus on the figure.
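The background-subtraction idea is simple enough to sketch in a few lines of Python. This is only an illustration of the general technique on depth frames, not a description of what iPi Studio actually does internally; the function name and the 100 mm threshold are assumptions.

```python
def foreground_mask(background, frame, threshold_mm=100):
    """Mark pixels whose depth differs from the empty-room frame.

    `background` and `frame` are lists of rows of depth values in
    millimetres. Returns rows of booleans: True means "probably the
    figure", False means "probably the room".
    """
    mask = []
    for bg_row, frame_row in zip(background, frame):
        mask.append([abs(f - b) > threshold_mm
                     for b, f in zip(bg_row, frame_row)])
    return mask
```

The threshold soaks up sensor noise: a wall that reads 3000 mm in one frame and 3010 mm in the next still counts as background.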

The software then goes through the video and converts the 3D data into motion capture info.

It can take a while; it took about 45 minutes to process a few minutes of mocap on my laptop.

This can be exported to assorted formats, such as bvh (Biovision Hierarchical motion capture data) that can be used in rendering programs such as Blender.

For whatever reason the resulting figure was rotated face-down. I needed to use another tool, BVHacker, in order to correct this.

Brekel Kinect

Brekel Kinect is a free application that is similar to iPi Studio.

It's not quite as stupid-easy to use. But it's free. The software handles both the recording of motion and the export to a mocap data file (e.g. bvh).

The resulting figure had some different shapes than what iPi Studio produced, and I again needed BVHacker to make some positioning corrections.

Using depth data

Processing and blob detection

Animata and the mocap tools use skeleton data, but you can also use depth data by itself as a control source for other tools.

This is where it gets a little geeky, since I've not used any software that simply works out-of-the-box with Kinect depth data (aside, of course, from the object positioning of the motion capture software).

Since most software knows nothing of Kinect I looked to use OSC again as an intermediary means of communication.

This meant writing or obtaining software that can grab the depth data, makes some sense of it, and emit useful OSC messages to some end application.

Usually my first choice for a programming language is Ruby, but I'm super lazy so if someone has already done the hard stuff but in another language I'll seriously look at that language.

It so happens that there's a lot of interesting work being done with a language called Processing. Processing was created as a way to get artists and musicians to work with software and computers without having to first go get a CompSci degree.

Under the hood it's really using the Java programming language, but it hides that away in a nice, simplified abstraction layer. But since it's Java under the hood it means people can use Java stuff from Processing, and a few people have taken some of the Kinect code and made it usable right from Processing.

Processing would be a topic by itself for a whole other talk and I'm going to skip explaining the codey part. The key parts are:

- There's some library that gets data from the Kinect

- There's some Processing wrapper to get that data into your Processing program

- There's some other cool code someone else wrote that does neat things with that data, such as blob detection.

- Your Processing app uses that data to emit OSC commands

Note: I still need to gather up the code I wrote for the talk and post it someplace.

I used Antonio Molinaro's Blobscanner Processing library, plus Daniel Shiffman's code for reading depth data, so that I could detect when something (my hands, for example) was within a given depth range. I broke the display screen into quadrants, and on each loop sent out an OSC message based on how many blobs (one or two) and in what quadrants. For example, a single blob to the right of the screen generated a /right message.
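The same idea can be sketched in plain Python. This is not the Processing code from the talk and it does not use Blobscanner; it swaps in a simple flood fill to find connected regions inside a depth range, then picks an OSC address from the result. Only `/right` comes from the description above; `/left` and `/two` are made-up addresses for illustration, and the sketch splits the screen into halves rather than quadrants to keep it short.

```python
def find_blobs(depth, near_mm, far_mm, min_pixels=4):
    """Return centroids (x, y) of connected regions with depth in [near_mm, far_mm].

    `depth` is a list of rows of depth values in millimetres. Regions
    smaller than `min_pixels` are ignored as noise.
    """
    height = len(depth)
    width = len(depth[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    centroids = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or not (near_mm <= depth[y][x] <= far_mm):
                continue
            # Flood fill from this pixel, collecting the whole region.
            stack = [(x, y)]
            seen[y][x] = True
            pixels = []
            while stack:
                px, py = stack.pop()
                pixels.append((px, py))
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx]
                            and near_mm <= depth[ny][nx] <= far_mm):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            if len(pixels) >= min_pixels:
                cx = sum(p[0] for p in pixels) / len(pixels)
                cy = sum(p[1] for p in pixels) / len(pixels)
                centroids.append((cx, cy))
    return centroids


def blobs_to_address(centroids, width):
    """Pick an OSC address from the blob count and position (hypothetical scheme)."""
    if len(centroids) == 1:
        return "/right" if centroids[0][0] >= width / 2 else "/left"
    if len(centroids) == 2:
        return "/two"
    return None  # nothing in range, or too many blobs to interpret
```

On each frame you would call `find_blobs` with the depth range you care about, pass the result to `blobs_to_address`, and send the returned address (if any) as an OSC message.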

Kinect and SketchUp

Embedding OSC-capable code

Once there was a way to turn depth data into OSC messages, I could direct those messages to whatever other software could make sense of them. Sadly this demo was not working when I used the local network created by tethering off my phone (though it worked really well at home).

There are a few ways you can do this, depending on whether your target application has native OSC support.

I've been poking around with Google SketchUp for a while and it has the very nice feature of being scriptable with Ruby. I snarfed some OSC Ruby code, hacked it a bit to play nice in SketchUp, and used a plug-in to get SketchUp to load additional Ruby libraries so that it would be able to handle socket communication. (SketchUp comes with a stripped-down version of Ruby but you can munge $: to include additional library folders.)

So now I have a SketchUp plug-in that can accept OSC messages and turn them into SketchUp commands. (Note to geeks: one of the commands allows for dynamic loading of OSC-handling code so you can change or update what messages are processed without having to restart SketchUp.)
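The dynamic-loading trick is a general pattern, and here is a minimal sketch of it in Python rather than Ruby. It is not the SketchUp plug-in's code; the class name, the `/load` address, and the `HANDLERS` convention are all assumptions made up for this example.

```python
class OscRouter:
    """Route OSC addresses to handlers that can be replaced at runtime."""

    def __init__(self):
        self.handlers = {}

    def load(self, source):
        """Run handler source code; it must define a dict named HANDLERS."""
        namespace = {}
        exec(source, namespace)  # runs arbitrary code: trusted sources only
        self.handlers.update(namespace["HANDLERS"])

    def dispatch(self, address, *args):
        """Call the handler for `address`, or reload handlers on /load."""
        if address == "/load":
            # The single argument is the path of a file of handler code.
            with open(args[0]) as handler_file:
                self.load(handler_file.read())
            return None
        handler = self.handlers.get(address)
        return handler(*args) if handler else None
```

Because `/load` is itself just another message, you can edit the handler file and push the new behavior into the running program without restarting it.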

Kinect and LightUp

Cheat: Turn OSC into keyboard commands

Not all software lets you embed your own code, or has built-in OSC options, so another path (on Windows, at least) is to use AutoIt to convert OSC into keyboard and mouse commands. Now you can control pretty much anything.
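The heart of such a bridge is just a lookup table from OSC addresses to keys. Here is a minimal Python sketch of that idea; it is not AutoIt code, and the addresses, key names, and function name are all hypothetical. The function that actually presses the key is passed in, since that part depends on your platform and tooling.

```python
# Hypothetical mapping from OSC addresses to key names.
KEY_MAP = {
    "/left": "LEFT",
    "/right": "RIGHT",
    "/two": "UP",
}


def osc_to_key(address, press_key, key_map=KEY_MAP):
    """Translate an OSC address into a key press.

    `press_key` is whatever callable really sends the keystroke.
    Returns True if the address was mapped, False otherwise.
    """
    key = key_map.get(address)
    if key is None:
        return False
    press_key(key)
    return True
```

For a dry run you can pass `print` as `press_key` and watch which keys would be sent.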

Adam Billyard has written an add-on for SketchUp called LightUp that creates amazingly realistic lighting for SketchUp scenes. I like it for the amazing unrealistic things it can do (for example).

Aside from doing rendering inside SketchUp, the add-on lets you export your results to files that can be rendered using the free standalone program, LightUp Player. You can use the keyboard and mouse to move in and around these renderings, and with the AutoIt OSC bridge in place, plus the Processing blob detection program, you can do so using the Kinect.

The Recap

- Kinect – Skeleton and depth data

- Some programs (e.g. iPi Recorder) have built-in Kinect communication

- Some programs are designed to handle OSC, which can be used as an intermediary format for Kinect data

- Most programs don't have built-in OSC support

- For some, you can add it

- For others, you can use something like AutoIt to convert OSC to mouse and keyboard actions

Finally: If you have some means of getting a program (or whatever) to respond to OSC, you can use any number of things to generate that OSC.

Some random links:

- http://urbanhonking.com/ideasfordozens/2011/02/16/skeleton-tracking-with-kinect-and-processing/

- Glovenect: Kinect + Glovepie – http://kinect.dashhacks.com/kinect-hacks/2011/04/27/using-kinect-inside-glovepie-emulate-any-input-device

- BTW: Glovepie only works with the Microsoft Kinect SDK :(